Poking Around

Have you ever poked around a website, clicking links or visiting different directories? If you have, you might have come across something interesting, or even a webpage that had no link pointing to it. If what you found was sensitive information that was never meant to be public, that is called an information disclosure.

Information disclosures come in many variations, but they are essentially the same thing: information that should not be disclosed is out in the open, sometimes for anyone to see with minimal effort.

OWASP’s Top 10 Application Security Risks ranks Cryptographic Failures (formerly called Sensitive Data Exposure) as number two on its list. In other words, the problem is common enough that attackers actively look for passwords, keys, session tokens, and more, because finding them can lead to a successful security breach.

Where to Learn?

I have written about PortSwigger labs before, and I will do so again today. PortSwigger is a great website for learning about web application security testing and their tool Burp Suite, and for practicing in hands-on labs.

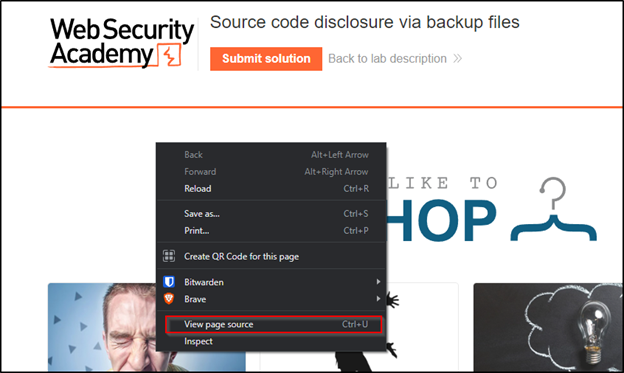

In this example, PortSwigger’s lab “Source code disclosure via backup files,” we will explore a source code disclosure of a kind that is sometimes seen in actual engagements on real websites. We will also look at another regularly abused example that, unfortunately, attackers have used with tremendous success.

When pentesting an application or a webapp, the page source is one of the most common places to check for information. You can right-click and select View page source or use the shortcut Ctrl+U.

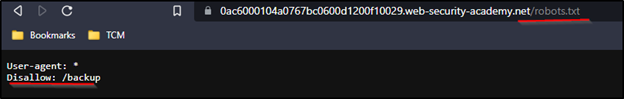
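Reading the page source by hand works, but the same check can be scripted. The sketch below is a minimal illustration using only Python’s standard library. The `CommentCollector` class, the `extract_comments` helper, and the sample HTML are hypothetical names and data, not part of the lab. It collects HTML comments, one common place where leftover notes and paths end up.

```python
from html.parser import HTMLParser


class CommentCollector(HTMLParser):
    """Collects the text of every HTML comment it encounters."""

    def __init__(self):
        super().__init__()
        self.comments = []

    def handle_comment(self, data):
        # Called by HTMLParser for each <!-- ... --> block.
        self.comments.append(data.strip())


def extract_comments(html):
    """Return a list of comment strings found in the given HTML text."""
    parser = CommentCollector()
    parser.feed(html)
    parser.close()
    return parser.comments


if __name__ == "__main__":
    # Hypothetical page source, for illustration only.
    sample = "<html><!-- TODO: remove /backup --><body>Shop</body></html>"
    for comment in extract_comments(sample):
        print(comment)
```

In practice you would pass in the page source you saved from the browser or from Burp Suite rather than a hard-coded string.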

If you don’t see anything of value here, you can always check /robots.txt for any pertinent information.

We can see Disallow: /backup. The robots.txt file tells web crawlers which paths not to crawl on this website. It is a request rather than an enforcement mechanism, since some web crawlers ignore it and will crawl or scan anyway.

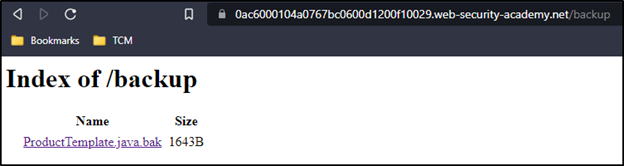
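Because every Disallow entry is a path the site owner would rather keep quiet, it is worth listing them all. The following is a rough sketch, assuming you already have the text of robots.txt in hand; the `disallowed_paths` function and the sample content are hypothetical. It does a simple line-by-line parse and makes no attempt to handle every edge case of the robots.txt format.

```python
def disallowed_paths(robots_txt):
    """Return the non-empty Disallow values from robots.txt text, in order."""
    paths = []
    for raw_line in robots_txt.splitlines():
        # Drop trailing comments and surrounding whitespace.
        line = raw_line.split("#", 1)[0].strip()
        if ":" not in line:
            continue
        field, value = line.split(":", 1)
        value = value.strip()
        # An empty Disallow means "nothing is disallowed", so skip it.
        if field.strip().lower() == "disallow" and value:
            paths.append(value)
    return paths


if __name__ == "__main__":
    # Sample content modeled on the lab's single entry.
    sample = "User-agent: *\nDisallow: /backup\n"
    print(disallowed_paths(sample))
```

Each returned path is a candidate endpoint to visit manually during an authorized test.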

Seeing that there is a /backup directory, we will visit that endpoint to see what it contains.

We see an unsecured backup file. At this point, a few things are already wrong. One is that the /backup endpoint is listed in the robots.txt file. This is not a good way of stopping web crawlers from scanning specific directories, because it advertises the path to anyone who reads the file. Another is that the backup directory is accessible without any authentication. The directory should not be accessible via the web application at all.

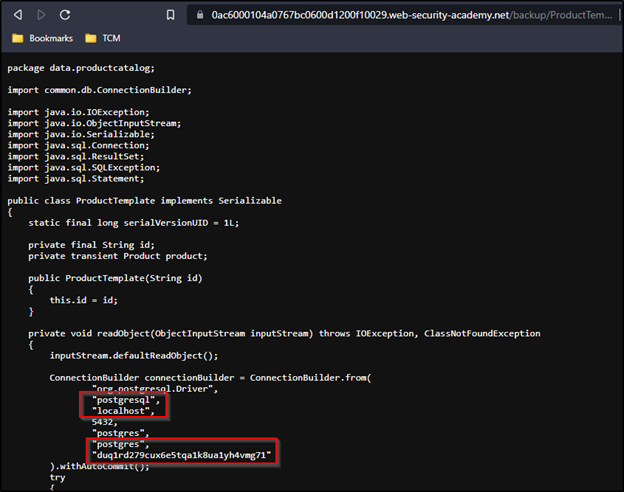

Finally, as we will see, the file itself contains plain-text credentials to a database.

This is poor security. Not only do we see the database credentials in plain text, but we also see what kind of database the web server uses. This could hand an attacker the keys to the kingdom.

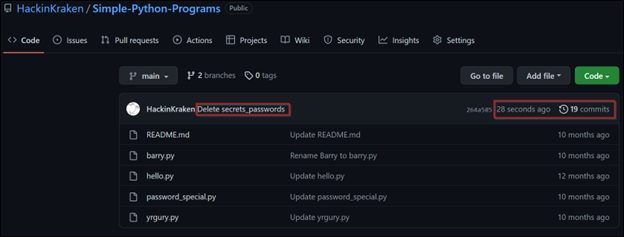
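One common way to keep credentials out of source files, and therefore out of their backups, is to read them from the environment at runtime. The sketch below illustrates that idea only; it is not the lab’s code, and the variable names `DB_HOST`, `DB_USER`, and `DB_PASSWORD` as well as the `load_db_config` helper are assumptions made for the example.

```python
import os
from typing import Mapping

# Hypothetical setting names; use whatever your deployment defines.
REQUIRED_VARS = ("DB_HOST", "DB_USER", "DB_PASSWORD")


def load_db_config(env: Mapping[str, str] = os.environ) -> dict:
    """Build a database config from environment variables.

    Raises RuntimeError if any required variable is missing or empty,
    so the application fails loudly instead of falling back to a
    hard-coded value.
    """
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise RuntimeError("Missing required settings: " + ", ".join(missing))
    return {name.lower(): env[name] for name in REQUIRED_VARS}
```

With this approach, a leaked copy of the source reveals only the names of the settings, not their values.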

Let’s take a step away from PortSwigger labs and briefly discuss how GitHub repositories can also be grounds for information disclosure. Often, an organization with lots of developers will accidentally expose information that was never meant to be exposed. For example, a developer might push code containing secrets to her own personal GitHub repo, or do the same in the company GitHub repo. There are tools like Truffle Hog that are great at finding leaked credentials.
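To give a feel for what credential scanning involves, here is a deliberately simplified sketch. It is not how Truffle Hog works internally; it only shows the basic idea of matching known secret formats with regular expressions. The `PATTERNS` table and `find_secrets` function are hypothetical, and the key in the sample is the placeholder value AWS uses in its own documentation. The first pattern relies on the fact that long-term AWS access key IDs start with `AKIA` followed by 16 uppercase letters or digits.

```python
import re

# Illustrative patterns only; real scanners use many more rules
# plus entropy checks and live verification.
PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "password_assignment": re.compile(r"(?i)(?:password|passwd|pwd)\s*[:=]\s*\S+"),
}


def find_secrets(text):
    """Return (line_number, pattern_name) pairs for every suspicious line."""
    findings = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for label, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append((line_no, label))
    return findings


if __name__ == "__main__":
    sample = (
        'region = "us-east-1"\n'
        'aws_key = "AKIAIOSFODNN7EXAMPLE"\n'
        'db_password = "hunter2"\n'
    )
    for line_no, label in find_secrets(sample):
        print(f"line {line_no}: possible {label}")
```

Simple patterns like these produce false positives and miss many secret types, which is why a dedicated tool is the better choice for real repositories.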

Don’t forget about commit history!

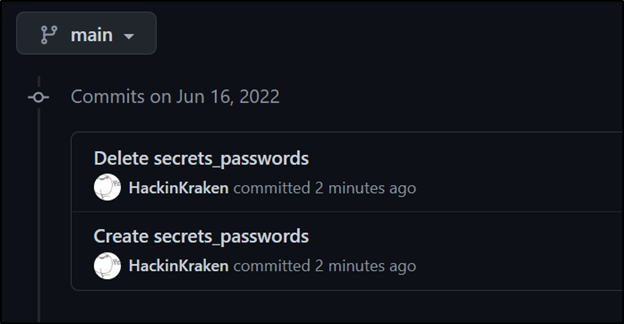

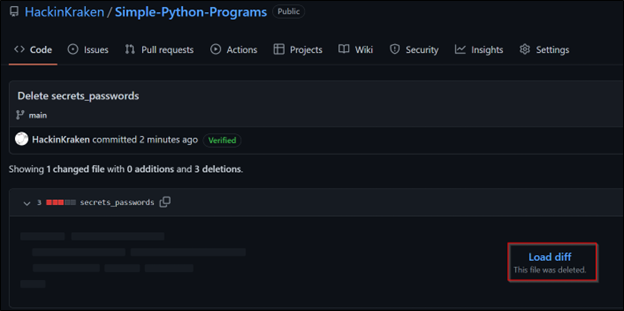

Even if the developer realized her mistake and removed the credentials, there is still a copy in the commit history.

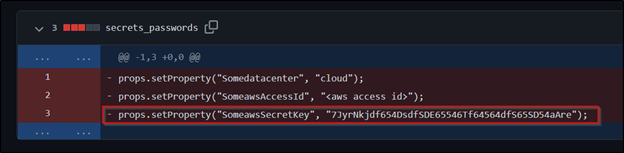

We see that even though the file was deleted from the repo, the commit history still holds two copies of its contents: one in the commit that created the file and one in the commit that deleted it. At this point, the best thing to do is to rotate the AWS secret key immediately and train the developer on security best practices and awareness.
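You can check a local clone for this kind of leftover yourself. The sketch below wraps git’s “pickaxe” search (`git log -S`), which lists commits that added or removed a given string, so both the creation and the deletion commit show up. The function names and the idea of searching for a fragment of the key are assumptions for illustration, and the script expects `git` to be installed and `repo_path` to point at a real repository.

```python
import subprocess


def history_search_command(needle):
    """Build the git command that searches all history for a string.

    --all        : include every branch and tag, not just the current one
    -p           : show the patch so the leaked line is visible
    -S<string>   : only commits that change how often <string> occurs
    """
    return ["git", "log", "--all", "-p", f"-S{needle}"]


def search_history(repo_path, needle):
    """Run the search inside repo_path and return git's output as text."""
    result = subprocess.run(
        history_search_command(needle),
        cwd=repo_path,
        capture_output=True,
        text=True,
        check=True,  # raise CalledProcessError if git fails
    )
    return result.stdout
```

For example, `search_history(".", "AKIA")` would print every commit in the current repository that introduced or removed a string containing `AKIA`. Finding a hit confirms that deleting the file was not enough and the key still needs to be rotated.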

How to Prevent Sensitive Information Disclosure

The following is an excerpt from OWASP regarding the minimum you can do to prevent information disclosures.

- Classify data processed, stored, or transmitted by an application. Identify which data is sensitive according to privacy laws, regulatory requirements, or business needs.

- Don’t store sensitive data unnecessarily. Discard it as soon as possible or use PCI DSS compliant tokenization or even truncation. Data that is not retained cannot be stolen.

- Make sure to encrypt all sensitive data at rest.

- Ensure up-to-date and strong standard algorithms, protocols, and keys are in place; use proper key management.

- Encrypt all data in transit with secure protocols such as TLS with forward secrecy (FS) ciphers, cipher prioritization by the server, and secure parameters. Enforce encryption using directives like HTTP Strict Transport Security (HSTS).

- Disable caching for responses that contain sensitive data.

- Apply required security controls as per the data classification.

- Do not use legacy protocols such as FTP and SMTP for transporting sensitive data.

- Store passwords using strong adaptive and salted hashing functions with a work factor (delay factor), such as Argon2, scrypt, bcrypt or PBKDF2.

- Initialization vectors must be chosen appropriately for the mode of operation. For many modes, this means using a CSPRNG (cryptographically secure pseudo random number generator). For modes that require a nonce, the initialization vector (IV) does not need a CSPRNG. In all cases, the IV should never be used twice for a fixed key.

- Always use authenticated encryption instead of just encryption.

- Keys should be generated cryptographically randomly and stored in memory as byte arrays. If a password is used, then it must be converted to a key via an appropriate password-based key derivation function.

- Ensure that cryptographic randomness is used where appropriate, and that it has not been seeded in a predictable way or with low entropy. Most modern APIs do not require the developer to seed the CSPRNG to get security.

- Avoid deprecated cryptographic functions and padding schemes, such as MD5, SHA1, and PKCS #1 v1.5.

- Verify independently the effectiveness of configuration and settings.
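Two of the items above, salted adaptive password hashing and cryptographically secure randomness, can be sketched with Python’s standard library alone. This is a minimal illustration and not a vetted implementation: it uses PBKDF2 because `hashlib` ships it everywhere, the `hash_password` and `verify_password` helpers are hypothetical, and the default iteration count is an assumption that you should tune against current guidance and your own hardware.

```python
import hashlib
import hmac
import secrets

# Assumed work factor; check current OWASP guidance before relying on it.
DEFAULT_ITERATIONS = 600_000


def hash_password(password, iterations=DEFAULT_ITERATIONS):
    """Return (salt, digest, iterations) for storage alongside the user."""
    # secrets draws from the operating system's CSPRNG; no manual seeding.
    salt = secrets.token_bytes(16)
    digest = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), salt, iterations
    )
    return salt, digest, iterations


def verify_password(password, salt, expected_digest, iterations):
    """Recompute the digest and compare it in constant time."""
    candidate = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), salt, iterations
    )
    return hmac.compare_digest(candidate, expected_digest)
```

Storing the iteration count next to the salt and digest lets you raise the work factor later without invalidating existing hashes.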

Wrapping Up

We saw a couple of variations of information disclosure that have appeared in real security breaches. As simple and low-skill as information disclosures are, they are among the most abused vulnerabilities and can compromise entire company networks. Reduce your exposure by getting a professional pentest. Contact TCM Security for more information, and let us help you secure your organization.

Links

PortSwigger Web Academy

PortSwigger Burp Suite

OWASP Top 10

Truffle Hog

Author:

This blog was written by Steven Amador.

About TCM Security

TCM Security is a veteran-owned, cybersecurity services and education company founded in Charlotte, NC. Our services division has the mission of protecting people, sensitive data, and systems. With decades of combined experience, thousands of hours of practice, and core values from our time in service, we use our skill set to secure your environment. The TCM Security Academy is an educational platform dedicated to providing affordable, top-notch cybersecurity training to our individual students and corporate clients including both self-paced and instructor-led online courses as well as custom training solutions. We also provide several vendor-agnostic, practical hands-on certification exams to ensure proven job-ready skills to prospective employers.

Pentest Services: https://tcmdev.tcmsecurity.com/our-services/


Contact Us: sales@tcm-sec.com